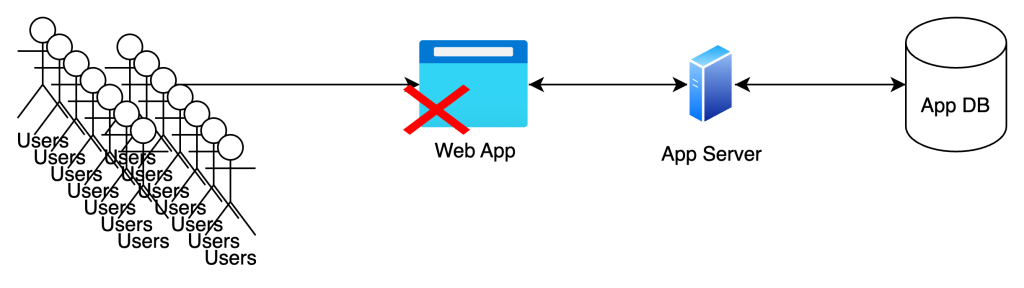

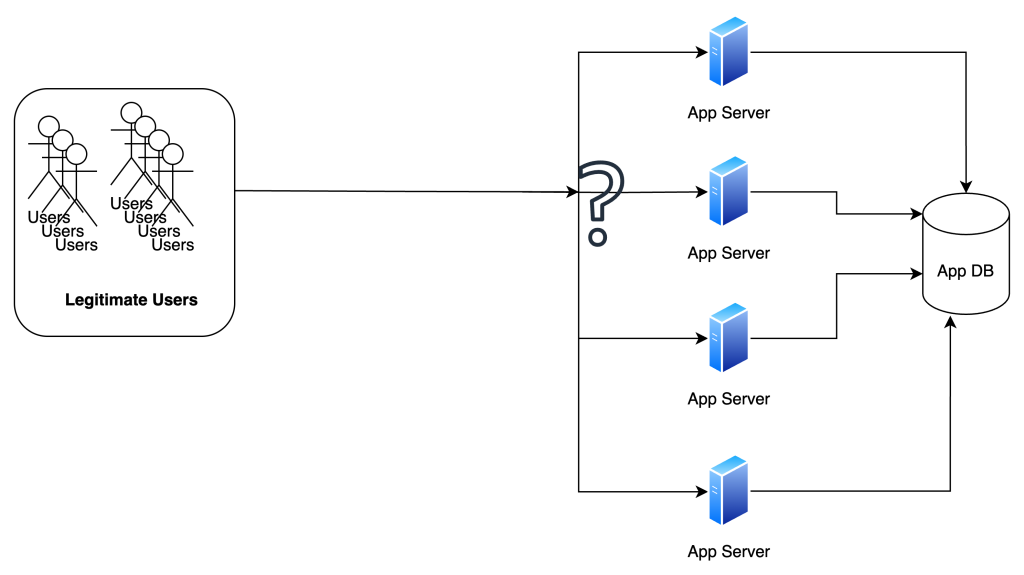

Let’s go back to the simple system design from our last post:

Now it’s all good, and everything works. Until...

Our users increase and overwhelm the web server 🙁

What’s Happening?

Our humble little CPU is overwhelmed by an abundance of requests. It can’t handle each one quickly enough, loses the ability to hold connections open, and eventually the server crashes and stops responding.

What to do next?

1. Recover From Failures

First of all, we need our servers to recover from such crashes automatically.

So we create an OS-level service that runs our app and restarts it whenever it crashes.

A good example is PM2 (https://pm2.keymetrics.io/), a process manager for Node.js apps.

Its job is to bring our web server process back up and running after every crash, just like saying:

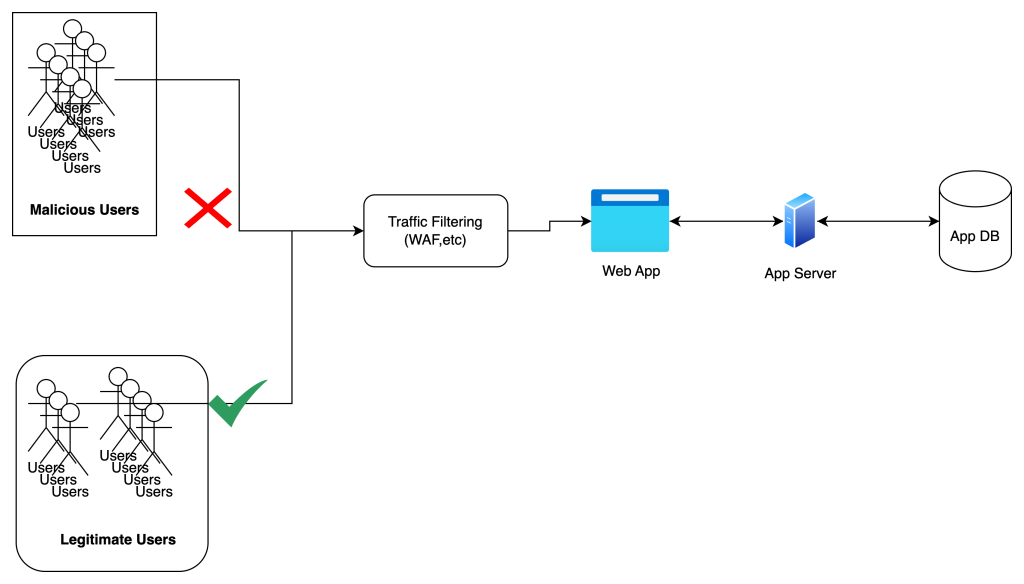
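To make the idea concrete, here is a minimal Python sketch of what such a supervisor does under the hood. It is not how PM2 is implemented; `supervise`, `max_restarts` and `backoff_seconds` are hypothetical names chosen for illustration. The sketch assumes the app can be launched as a plain command, restarts it whenever it exits with a non-zero code, and waits a little longer after each crash.

```python
import subprocess
import sys
import time


def supervise(cmd, max_restarts=5, backoff_seconds=1.0):
    """Run `cmd` and restart it whenever it exits with a non-zero code.

    Hypothetical helper illustrating what a process manager does;
    it is not PM2's implementation. Returns the number of restarts performed.
    """
    restarts = 0
    while True:
        # Launch the app and block until it exits.
        exit_code = subprocess.run(cmd).returncode
        if exit_code == 0:
            # Clean shutdown: nothing to recover from.
            return restarts
        if restarts >= max_restarts:
            # Give up instead of restarting a crash loop forever.
            print(f"giving up after {restarts} restarts", file=sys.stderr)
            return restarts
        restarts += 1
        print(f"process crashed with code {exit_code}, restart #{restarts}",
              file=sys.stderr)
        # Wait longer after each consecutive crash (exponential backoff).
        time.sleep(backoff_seconds * 2 ** (restarts - 1))
```

For example, `supervise(["node", "server.js"])` would keep a Node server running and give up after five failed restarts. The restart cap and backoff are design choices: without them, an app that crashes right on startup would burn CPU restarting endlessly.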

2. Are we under attack?

A load spike like this could well be a DoS (Denial of Service) or DDoS (Distributed DoS) attack, so we need to check whether the traffic comes from legitimate users or from attackers.

If it is an attack, we can do one of the following:

- Stay Idle – Trust the universal forces and contemplate the eternal truth “This too shall pass”. However, this rarely helps, so it is absolutely not recommended ❌🙅🏻‍♂️

- Identify and Block – Stop serving malicious requests using a Web Application Firewall (WAF), IP filtering, forced re-login with mandatory 2FA prompts, or simply by reissuing access keys after an ID check and a delayed approval.

That said, these measures are far more effective when set up before an attack than when applied during one.

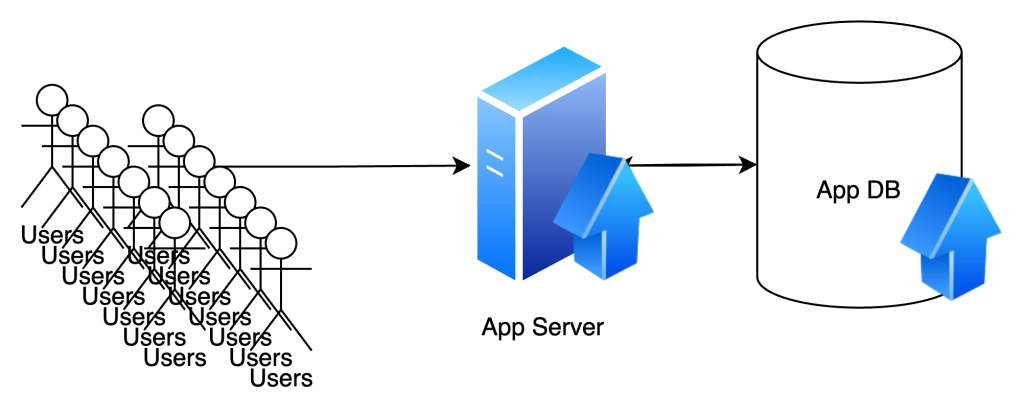
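As a rough illustration of the IP filtering idea, here is a minimal Python sketch of a per-IP filter that combines a static blocklist with token-bucket rate limiting (rate limiting is a closely related technique, swapped in because it fits in a few lines). `RequestFilter` and its parameters are hypothetical; in practice this job usually lives in a WAF, reverse proxy or CDN in front of the app rather than in app code.

```python
import time


class RequestFilter:
    """Hypothetical per-IP filter: a static blocklist plus a token-bucket rate limit."""

    def __init__(self, capacity=10, refill_per_sec=5.0, blocklist=()):
        self.capacity = capacity              # max burst of requests per IP
        self.refill_per_sec = refill_per_sec  # sustained requests/second per IP
        self.blocklist = set(blocklist)       # IPs we refuse outright
        self.buckets = {}                     # ip -> (tokens, time of last request)

    def allow(self, ip, now=None):
        """Return True if a request from `ip` should be served."""
        if ip in self.blocklist:
            return False
        now = time.monotonic() if now is None else now
        # A new IP starts with a full bucket.
        tokens, last = self.buckets.get(ip, (self.capacity, now))
        # Refill tokens for the time elapsed since this IP's last request.
        tokens = min(self.capacity, tokens + (now - last) * self.refill_per_sec)
        if tokens < 1:
            # Bucket empty: reject until enough time passes to refill.
            self.buckets[ip] = (tokens, now)
            return False
        # Spend one token on this request.
        self.buckets[ip] = (tokens - 1, now)
        return True
```

Each IP can burst up to `capacity` requests and is then held to roughly `refill_per_sec` requests per second. Per-IP limits help most against a DoS from a handful of sources; a DDoS spread across many IPs usually needs filtering further upstream. A real version would also evict idle entries from `buckets` so it doesn't grow without bound.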

- Scale Up – Increase System Capacity

We can do this in two ways:

- By upgrading the server’s specs, i.e. more CPU, RAM and so on (Vertical Scaling). This approach has one problem: we can’t keep increasing the specs forever, as there is a hard limit!

Moreover, bumping up specs gets expensive real quick, so be ready for a mighty bill 😛

- By creating multiple server instances and distributing the load across them (Horizontal Scaling). This approach has its own trade-offs, too.

Yes, it solves the problems of Vertical Scaling and keeps costs in check by adding cheaper machines to our pool of instances, but it creates a new problem: how do we balance the load among these instances?

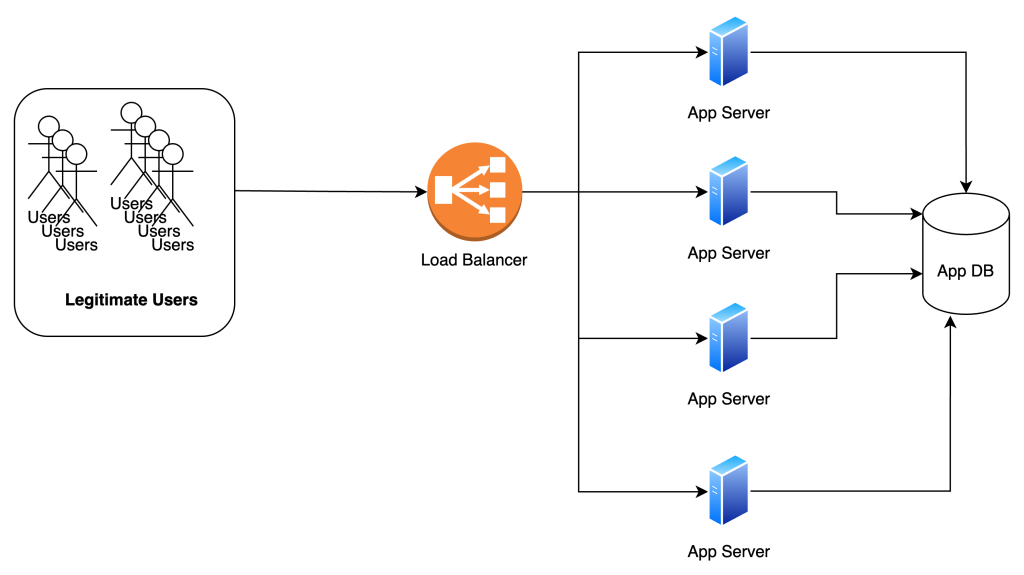

Ans: Load Balancer 🙂

A simple node in our design that focuses on exactly the problem at hand: spreading incoming requests across multiple server instances.
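Here is a minimal Python sketch of the simplest balancing strategy, round robin, with one addition: backends marked as down are skipped, which is what keeps the system serving traffic when one instance crashes. `RoundRobinBalancer`, `mark_down`/`mark_up` and the backend names are hypothetical; a real setup would typically use something like nginx, HAProxy or a cloud load balancer, with periodic health checks doing the marking.

```python
import itertools


class RoundRobinBalancer:
    """Hypothetical round-robin load balancer that skips unhealthy backends."""

    def __init__(self, backends):
        if not backends:
            raise ValueError("need at least one backend")
        self.backends = list(backends)
        self.healthy = set(self.backends)
        # Endless iterator over backends in a fixed order.
        self._cycle = itertools.cycle(self.backends)

    def mark_down(self, backend):
        # e.g. called when a periodic health check fails
        self.healthy.discard(backend)

    def mark_up(self, backend):
        # e.g. called when a health check succeeds again
        if backend in self.backends:
            self.healthy.add(backend)

    def next_backend(self):
        """Pick the next healthy backend in round-robin order."""
        # Try each backend at most once per call.
        for _ in range(len(self.backends)):
            backend = next(self._cycle)
            if backend in self.healthy:
                return backend
        raise RuntimeError("no healthy backends available")
```

With `lb = RoundRobinBalancer(["10.0.0.1:8080", "10.0.0.2:8080"])`, calling `lb.next_backend()` once per request alternates between the two instances. Round robin assumes instances are roughly equal and requests cost about the same; strategies such as least-connections adapt better when they don't.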

Now our system looks far better: our web tier is highly available, and we can easily scale it as our needs grow. The catch is slower reads and writes, since every instance still reads from and writes to the same single DB!

Well, that’s indeed bad, but hey, we have a system up and running!

Even if it’s not fully functional 😂

We will work on that in the next one: Handling Trade-offs

Till then,

Stay Curious,

and Keep Learning! 😀

Peace,

Anmoldeep.